OpenAI Orion-1: Reasoning or Memorization?

Introduction and Motivation

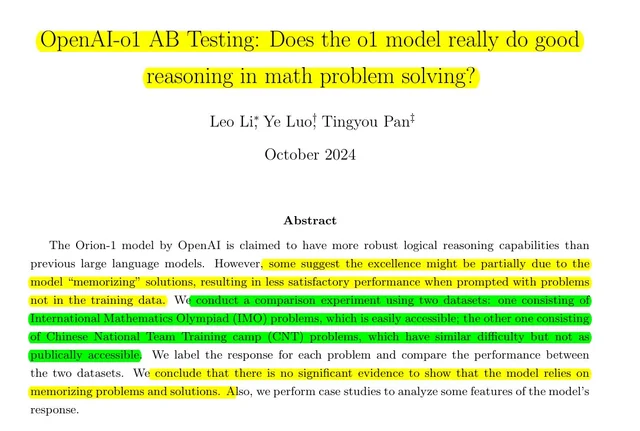

OpenAI recently unveiled a new large language model called “o1” or “Orion-1,” which shows impressive logical reasoning capabilities, particularly on challenging mathematical problems. The model raises a question: how much of its performance comes from genuine reasoning, and how much from memorizing problems seen during training?

Background and Previous Work

Research on the mathematical reasoning skills of large language models (LLMs) has progressed from elementary problems to Olympiad-level mathematics. The o1 model is designed to favor genuine reasoning patterns and is presented as an advance in this area over earlier models such as GPT-4.

Methodology

The authors of the study constructed two datasets of challenging math problems: one from the International Mathematical Olympiad (IMO) and another from the Chinese National Team (CNT) training camp. By comparing o1-mini’s performance on the two datasets, they aimed to determine whether the model’s success is based on reasoning or memorization.

Results and Statistical Analysis

After evaluating 120 problems from both datasets, the study found that o1-mini’s performance did not differ significantly between the widely accessible IMO problems and the less accessible CNT problems. If the model relied mainly on memorization, it would be expected to do noticeably better on the public IMO problems, so the comparable results suggest that its abilities are rooted in reasoning rather than memorization.
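One common way to check whether two success rates differ significantly is a two-proportion z-test. The sketch below is only an illustration of that kind of comparison: the summary does not say which statistical test the authors used, the function name `two_proportion_z_test` is hypothetical, and the counts in the usage lines are placeholders, not results from the study.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two success rates.

    Returns (z, p_value). This is an assumed, generic test, not
    necessarily the one used in the study.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled success rate under the null hypothesis of equal rates.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0.0:
        # Both groups are all successes or all failures: no detectable difference.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Placeholder counts for illustration only (NOT taken from the study):
# 21 of 50 "public" problems solved vs. 19 of 50 "private" problems solved.
z, p = two_proportion_z_test(21, 50, 19, 50)
print(f"z = {z:.3f}, p = {p:.3f}")
```

A large p-value in such a test means the data give no evidence that the two rates differ, which is the pattern the study reports; it does not by itself prove the rates are equal.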

Qualitative Observations and Case Studies

Case studies revealed how o1-mini approaches problem-solving, showcasing its strengths in providing intuition for complex problems but also its weaknesses in formal mathematical proofs and complex search strategies.

Discussion and Implications

The study’s findings contribute to understanding LLMs’ mathematical reasoning capabilities. While o1 shows promising progress in generalizing reasoning skills, it still falls short of human-like rigorous mathematical reasoning. The results call for further refinement in training methods to enhance the model’s problem-solving capabilities.

Conclusion

The study concludes that the OpenAI o1 model demonstrates genuine reasoning abilities, as shown by its consistent performance across different datasets. While the model still lacks complete rigor in its reasoning, it shows potential for further development towards more reliable proof assistance and problem-solving capabilities.

Source: kingy.ai